These pages contain basic concepts and details to make optimal use of TU Delft’s DAIC. Alternatively, you might wish to jump to the Quickstart or Tutorials for more thematic content.


Documentation

- 1: About DAIC

- 2: Policies & Usage guidelines

- 3: System specifications

- 3.1: Login Nodes

- 3.2: Compute nodes

- 3.3: Storage

- 3.4: Scheduler

- 3.5: Cluster comparison

- 4: User manual

- 4.1: Connecting to DAIC

- 4.2: Data management & transfer

- 4.3: Software

- 4.3.1: Operating system

- 4.3.2: Available software

- 4.3.3: Modules

- 4.3.4: Installing software

- 4.3.5: Containerization

- 4.4: Job submission

- 4.4.1: Basics of Slurm jobs

- 4.4.2: Priorities, Partitions, Quality of Service & Reservations

- 4.4.3: Advanced Slurm jobs

- 4.4.4: Kerberos

- 4.5: Best practices

- 4.6: Handy commands on DAIC

1 - About DAIC

What is an HPC cluster?

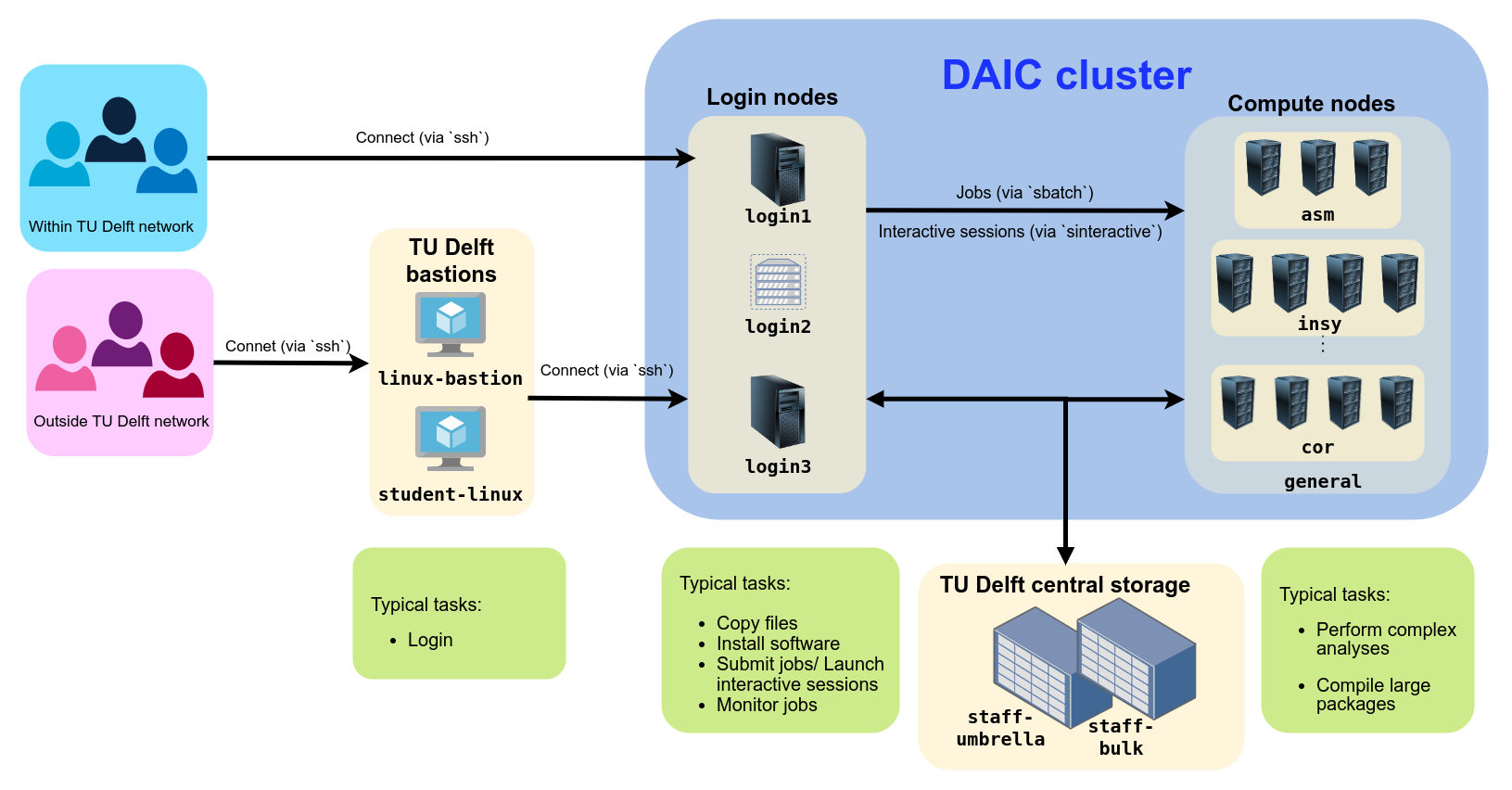

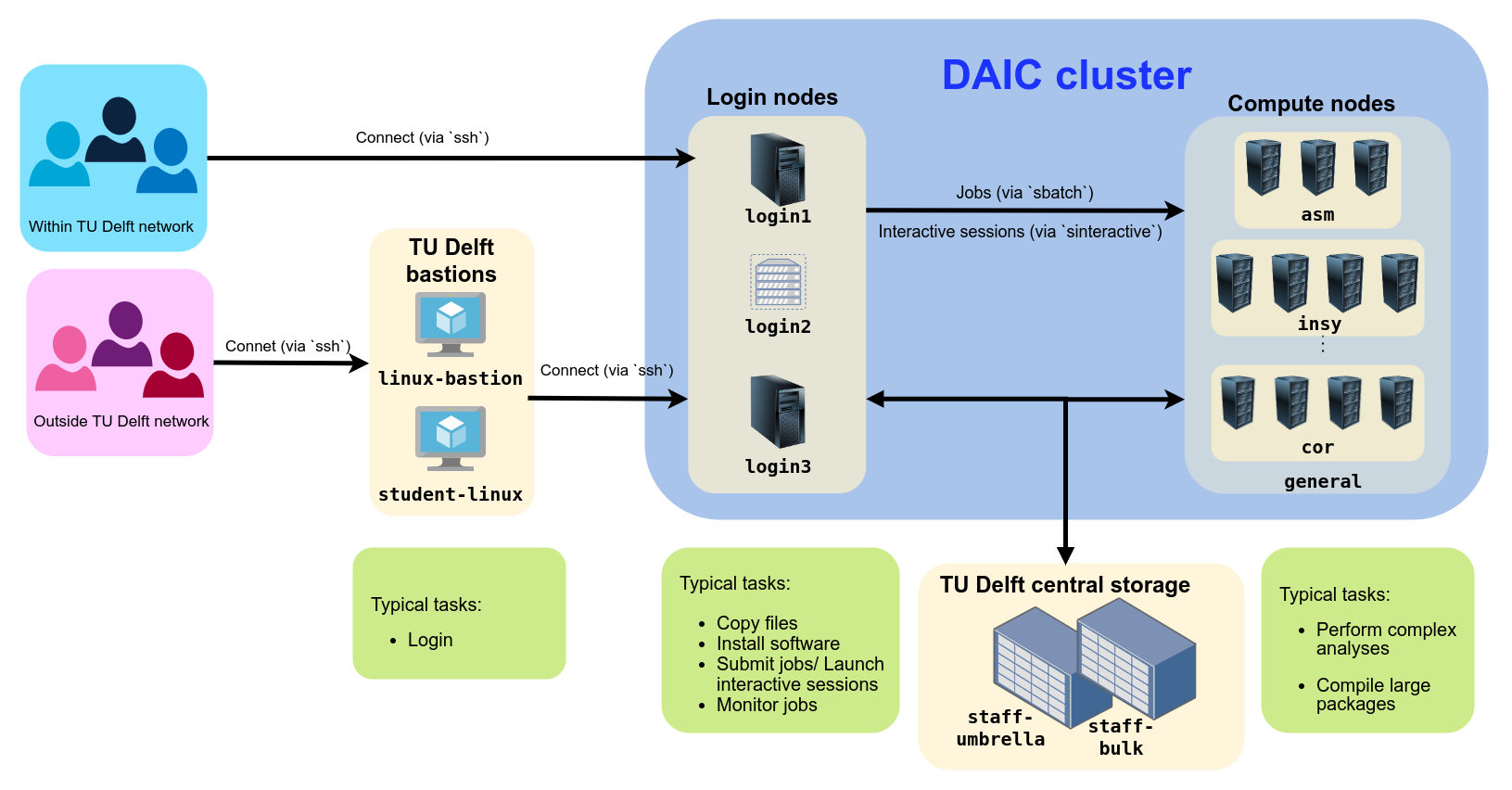

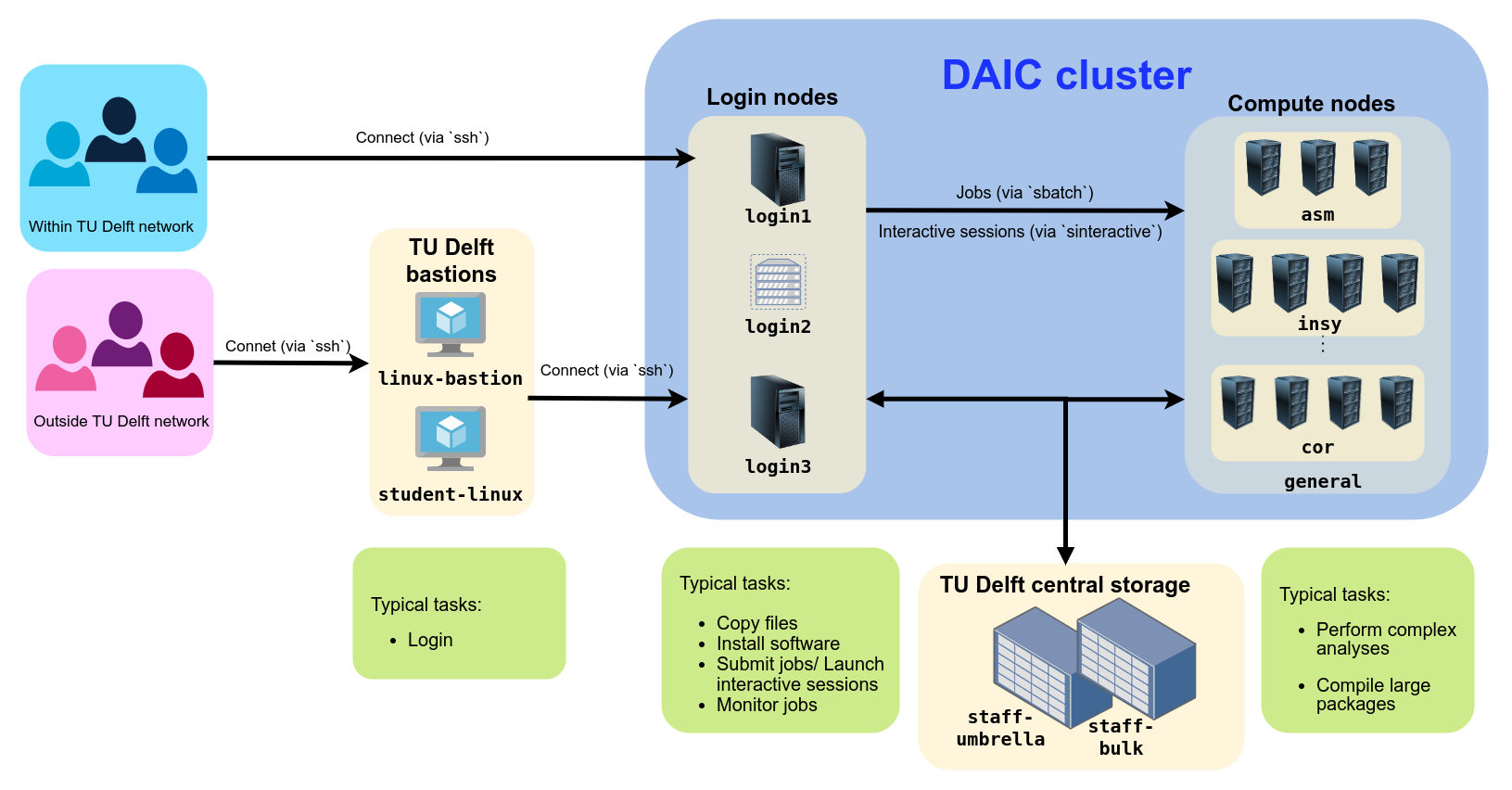

A high-performance computing (HPC) cluster is a collection of interconnected compute resources (like CPUs, GPUs, memory, and storage) shared among a group of users. These resources work together to perform lengthy and computationally intensive tasks that would be too large or too slow on a single computer. HPC is especially useful for modern scientific computing applications, where datasets are typically large, models are complex, and computations require specialized hardware (such as GPUs or FPGAs).

What is DAIC?

The Delft AI Cluster (DAIC), formerly known as INSY-HPC (or simply “HPC”), is a TU Delft high-performance computing cluster consisting of Linux compute nodes (i.e., servers) with substantial processing power and memory for running large, long, or GPU-enabled jobs.

What started in 2015 as a CS-only cluster has grown to serve researchers across many TU Delft departments. Each expansion has continued to support the needs of computer science and AI research. Today, DAIC nodes are organized into partitions that correspond to the groups contributing those resources. (See Contributing departments and TU Delft clusters comparison.)

DAIC partitions and access/usage best practices

1.1 - Contributors and funding

The Delft AI Cluster (DAIC)—formerly known as INSY-HPC or simply HPC—was initiated within the INSY department in 2015. In later phases, resources were merged with the ST department (collectively called CS@Delft) and expanded further with contributions from other departments across multiple faculties.

Joining DAIC?

If you are interested in joining DAIC as a contributor, please contact us via this TopDesk DAIC Contact Us form.

Contributing departments

The cluster is available only to users from participating departments. Access is arranged through your department’s contact persons (see Access and accounts).

| # | Contributor | Faculty | Faculty abbreviation (English/Dutch) |
|---|---|---|---|
| 1 | | Faculty of Architecture and the Built Environment | ABE/BK |
| 2 | Architecture | Faculty of Architecture and the Built Environment | ABE/BK |
| 3 | | Faculty of Aerospace Engineering | AE/LR |
| 4 | Control and Operations | Faculty of Aerospace Engineering | AE/LR |
| 5 | Imaging Physics | Faculty of Applied Sciences | AS/TNW |
| 6 | | Faculty of Mechanical Engineering | ME |
| 7 | Geoscience & Remote Sensing | Faculty of Civil Engineering and Geosciences | CEG/CiTG |
| 8 | Intelligent Systems | Faculty of Electrical Engineering, Mathematics & Computer Science | EEMCS/EWI |
| 9 | Software Technology | Faculty of Electrical Engineering, Mathematics & Computer Science | EEMCS/EWI |
| 10 | Signal Processing Systems, Microelectronics | Faculty of Electrical Engineering, Mathematics & Computer Science | EEMCS/EWI |

Note

To check the corresponding nodes or servers for each department, see the Cluster Specification page.

Funding sources

In addition to funding from contributing departments, DAIC has received support from the following projects and funding sources:

Immersive Technology Lab, part of Convergence AI

1.2 - Advisors and Impact

Advisory board

Department of Intelligent Systems

Pattern Recognition and Bioinformatics group

Department of Intelligent Systems

Sequential Decision Making group

Software Technology Department

Data-intensive Systems group

Citation and Acknowledgement

To help demonstrate the impact of DAIC, we ask that you both cite and acknowledge DAIC in your scientific publications. Please use one of the following formats:

Delft AI Cluster (DAIC). (2024). The Delft AI Cluster (DAIC), RRID:SCR_025091. https://doi.org/10.4233/rrid:scr_025091

@misc{DAIC,

author = {{Delft AI Cluster (DAIC)}},

title = {The Delft AI Cluster (DAIC), RRID:SCR_025091},

year = {2024},

doi = {10.4233/rrid:scr_025091},

url = {https://doc.daic.tudelft.nl/}

}

TY - DATA

T1 - The Delft AI Cluster (DAIC), RRID:SCR_025091

UR - https://doi.org/10.4233/rrid:scr_025091

PB - TU Delft

PY - 2024

Research reported in this work was partially or completely facilitated by computational resources and support of the Delft AI Cluster (DAIC) at TU Delft (RRID: SCR_025091), but remains the sole responsibility of the authors, not the DAIC team.

Scientific impact in numbers

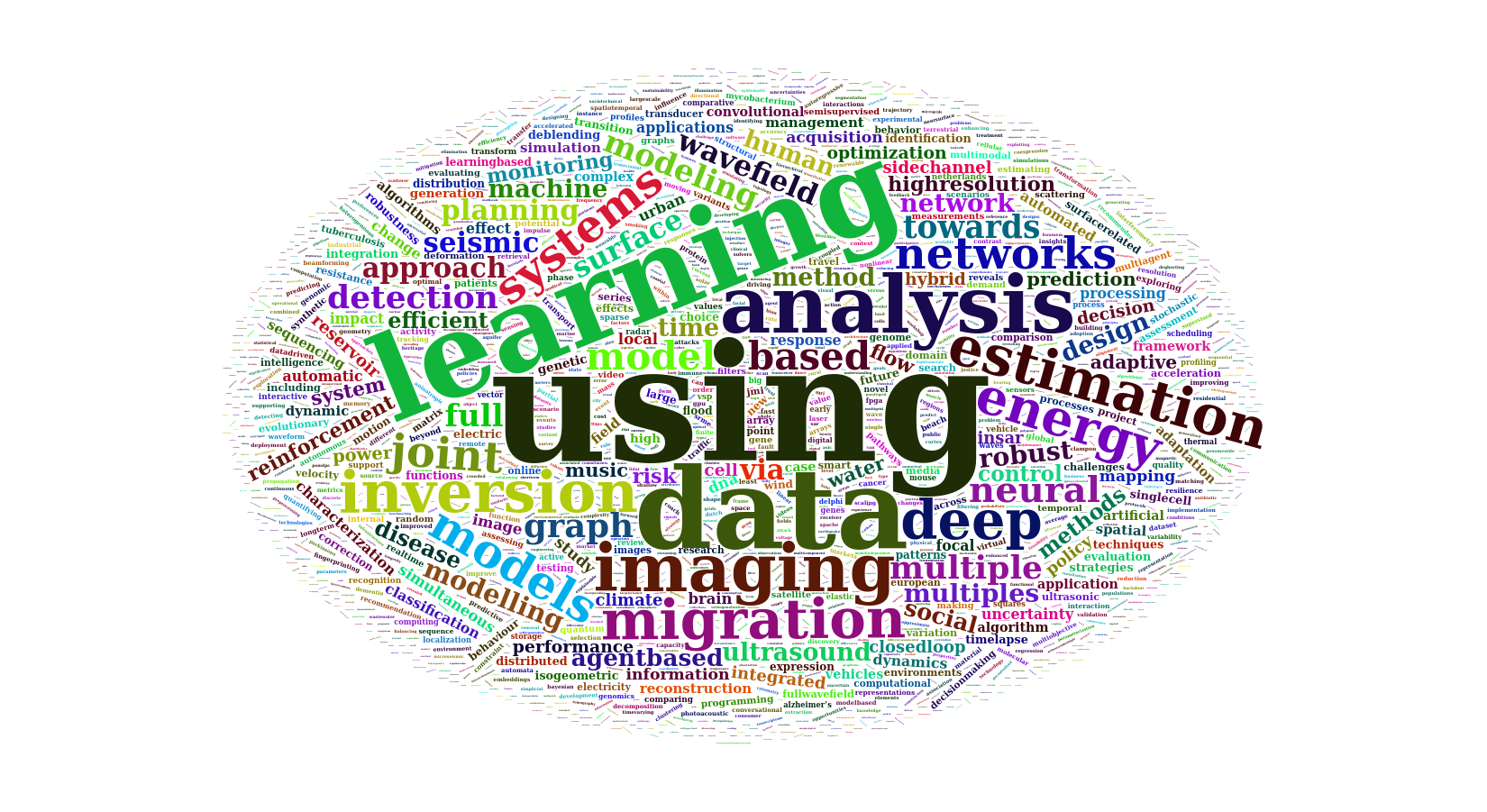

Since 2015, DAIC has facilitated more than 2,000 scientific outputs from participating departments:

| | Article | Conference/Meeting contribution | Book/Book chapter/Book editing | Dissertation (TU Delft) | Abstract | Other | Editorial | Patent | Grand Total |
|---|---|---|---|---|---|---|---|---|---|
| Grand Total | 1067 | 854 | 123 | 99 | 69 | 32 | 29 | 8 | 2281 |

These outputs span a wide range of research areas. Title analysis highlights frequent use of terms related to data analysis and machine learning:

Word cloud of the most common words in scientific output titles using DAIC

Reference

The table and word cloud are based on retrospective retrieval of scientific outputs (2015–2023) from TU Delft’s Pure database. Data was generated by the Strategic Development – Data Insights team.

Publications using DAIC

Note

The following list is compiled retrospectively by the Data Insights team and/or based on self-reported contributions. As a result, it may be incomplete. If your publication is missing, please share it via the ScientificOutput Mattermost channel.

2 - Policies & Usage guidelines

User agreement

This user agreement is intended to establish the expectations between all users and administrators of the cluster with respect to fair-use and fair-share of cluster resources. By using the DAIC cluster you agree to these terms and conditions.

Definitions

- Cluster structure: The DAIC cluster is made up of shared resources contributed by different labs and groups. The pooling of resources from different groups is beneficial for everyone: it enables larger, parallelized computations and more efficient use of resources with less idle time.

- Basic principles: Regardless of the specific details, cluster use is always based on basic principles of fair-use and fair-share (through priority) of resources, and all users are expected to take care at all times that their cluster use is not hindering other users.

- Policies: Cluster policies are decided by the user board and enforced by various automated and non-automated actions, for example by the job scheduler based on QoS limits and the administrators for ensuring the stability and performance of the cluster.

- Support:

- Cluster administrators offer, during office hours, different levels of support, which include (in order of priority): ensuring the stability and performance of the cluster, providing generic software, helping with cluster-specific questions and problems, and providing information (via e-mails and during the board meeting) about cluster updates.

- Contact persons from participating groups add and manage users at the level of their respective groups, communicate needs and updates between their groups and system administrators, and may help with cluster-specific questions and problems.

- HPC Engineers, in CS@Delft, provide support to (CS) students, researchers and staff members to efficiently use DAIC resources. This includes maintaining up-to-date documentation, running onboarding and advanced training courses on cluster usage, organizing workshops to assess compute needs and plan infrastructure upgrades, and, where appropriate, collaborating with researchers on individual projects.

- More information: Please see the Terms of service and What to do in case of problems sections on where to find more information about cluster use.

- Cluster workflow:

- The typical steps for running a job on the cluster are: Test → Determine resources → Submit → Monitor job → Repeat until results are obtained. See Quickstart.

- You can use the login nodes for testing your code, determining the required resources and submitting jobs (see Computing on login nodes).

- For testing jobs which require larger resources (more than 4 CPUs and/or more than 4 GB of memory and/or one or more powerful GPUs), start an interactive job (see Interactive jobs).

- For determining resources of larger jobs, you can submit a single (short) test job (see Submitting jobs).

- QoS:

- A Quality of Service (QoS) is a set of limits that controls what resources a job can use and determines the priority level of a job. DAIC adopts multiple QoSs to optimize the throughput of job scheduling and to reduce the waiting times in the cluster (see Quality of Service).

- The DAIC QoS limits are set by the DAIC user board, and the scheduler strictly enforces these limits. Thus, no user can use more resources than the amount that was set by the user board.

- Any (perceived) imbalance in the use of resources by a certain QoS or user should not be held against a user or the scheduler, but should be discussed in the user board.

Access and accounts

- DAIC is a cluster dedicated to TU Delft researchers (e.g., PhD students and postdocs) from participating groups (see Contributing departments).

Needing access to DAIC?

To access DAIC resources, eligible candidates from these groups can request an account via the DAIC request Access form.

- Additionally, requests for resource reservations can also be accommodated (see Terms of service).

Terms of service

- You may use cluster resources for your research within the QoS restrictions of your domain user and user group. Depending on your user group, you might be eligible to use specific partitions, giving higher priorities on certain nodes. See Priority tiers, and please check this with your lab.

- Depending on your user group, you might be eligible to get priorities on certain nodes. For example, you might have access to a specialized partition or limited-time node reservation for your group or department (for example before a conference deadline). Please check this with your lab and try to use these in your *.sbatch file; your jobs should then start faster. See Resources Reservations for more information.

- In general, you will be informed about standard administrative actions on the cluster. All official DAIC cluster e-mails are sent to your official TU Delft mailbox, so it is advised to check it regularly.

- You will receive e-mails about downtimes relating to scheduled maintenance.

- You, or your supervisor, will receive e-mails about scheduled cluster user board meetings where any updates and changes to the cluster structure, software, or hardware will be announced. Please check with your lab or feel free to join the cluster board meetings if you want to be up-to-date about any changes.

- You will receive automated e-mails regarding the efficiency of your jobs. The cluster monitors the use of resources of all jobs. When certain specific inefficiencies are detected for a significant number of jobs in the same day, an automated efficiency mail is sent to inform you about these problems with your resource use, to help you optimize your jobs. These mails will not lead to automatic cancellations or bans. To avoid spamming, limited inefficient use will not trigger a mail.

- You will receive an e-mail when your jobs are canceled or you receive a cluster ban (see the Expectations from cluster users and Consequences of irresponsible usage sections). You will be informed about why your jobs were canceled or why you were banned from the cluster (often before the bans take place). If the problem is still not clear to you from the e-mails you already received, please follow the steps detailed in the What to do in case of problems section.

- You are not entitled to receive personalized help on how to debug your code via e-mail. It is your responsibility to solve technical problems stemming from your code. Please first consult with your lab for a solution to a technical problem (see What to do in case of problems). However, admins might offer help, advice and solutions along with information regarding a job cancellation or ban. Please follow such advice; it may help you solve your problem and improve fair use of the cluster.

- You may join cluster user board meetings. In the meetings you will be informed of any new developments, hardware and software updates and can suggest changes and improvements. These meetings take place roughly every 3 months and will be announced by e-mail and on the Mattermost channel.

Expectations from cluster users

- You are responsible for your jobs not interfering with other users’ cluster usage. Please try to always keep in mind that cluster resources are limited and shared between all users, and that fair use benefits everyone.

- You are not allowed to use the cluster for reasons unrelated to your studies and research.

- If your jobs are destructive to other users’ jobs or are threatening cluster integrity, your jobs might be canceled. You have the responsibility at all times to avoid behavior which interferes negatively with other users’ cluster usage. See Consequences of irresponsible usage.

- If the destructive behavior of your jobs does not change over time or you are unresponsive to e-mails from system admins requesting information or requiring immediate action regarding your cluster use, you might receive a ban from the cluster. See Consequences of irresponsible usage.

- You are expected to cite and acknowledge DAIC in your scientific publications using the format specified in the Citation and Acknowledgement section. Additionally, please remember to post any scientific output based on work performed on DAIC to the ScientificOutput Mattermost channel.

Responsible cluster usage

You are responsible that your jobs run efficiently:

- Please keep an eye on your jobs and the automated efficiency e-mails to check for unexpected behavior.

- Sometimes many jobs from the same user, or from student groups, will be running on many nodes at the same time. While this may seem like one user, or user group, is blocking the cluster for everyone else, please keep in mind that the scheduler operates on a set of predetermined rules based on the QoS and priority settings. We do not want idle resources. Therefore, at the time that those jobs were started, the resources were idle, no higher priority jobs were in the queue and the jobs did not exceed the QoS limits. If you repeatedly observe pending jobs, please bring it up in the user board meeting.

- Short job efficiency: If you are running many (hundreds or thousands of) very short jobs (a few minutes each), keep in mind that starting each job and loading the same modules every time creates overhead. When reasonably possible, grouping some jobs together may save computation time. The grouped jobs can still be submitted to the short queue if the runtime is less than 4 hours.

- GPU job efficiency: If you are running multi-GPU jobs (for example due to GPU memory limitations), keep in mind that communication between the GPUs and other CPU processes (for example data loaders) may create overhead. It might be useful to run jobs on fewer GPUs with more GPU memory each, or to take advantage of specialized libraries optimized for multi-GPU computing in your code.
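For the short-job case above, grouping can be as simple as splitting the task list into fixed-size chunks and running each chunk inside one job. The following is a minimal sketch, not a DAIC-provided tool: the command strings, the file names, and the chunk size of 50 are hypothetical placeholders.

```python
from typing import Iterator, List, Sequence


def chunk_tasks(tasks: Sequence[str], tasks_per_job: int) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `tasks_per_job` tasks."""
    if tasks_per_job < 1:
        raise ValueError("tasks_per_job must be at least 1")
    for start in range(0, len(tasks), tasks_per_job):
        yield list(tasks[start:start + tasks_per_job])


# Hypothetical workload: 1000 short commands of a few minutes each.
commands = [f"python process.py --input sample_{i}.dat" for i in range(1000)]

# With 50 tasks per job, 1000 submissions become 20, so modules and
# environments are loaded 20 times instead of 1000.
batches = list(chunk_tasks(commands, tasks_per_job=50))
print(len(batches), "jobs instead of", len(commands))
```

Each batch would then run its commands one after another inside a single job. Choose the chunk size so that the total runtime of a batch stays under the 4-hour limit mentioned above.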

Consequences of irresponsible usage

- Your jobs might be canceled if:

- The node your jobs are running on becomes unresponsive and the node is automatically restarted.

- The job is overloading the node (for example overloading the network communication of the node).

- The job is adversely affecting the execution of other jobs (jobs that are not using all requested resources (effectively) and thus unfairly block waiting jobs from running may also be canceled).

- The jobs ignore the directions from the administrators (for example if a job is (still) affected by the same problem that the administrators informed you about before, and asked you to fix and test before resubmitting).

- The job is showing clear signs of a problem (like hanging, or being idle, or using only 1 CPU of the multiple CPUs requested, or not using a GPU that was requested).

- You might receive a cluster access ban for:

- Disallowed use of the cluster, including disallowed use of computing time, purposefully ignoring directions, guidelines, fair-use principles and/or (trying to gain) unauthorized access and/or causing disruptions to the cluster or parts thereof (even if unintentional).

- Unresponsiveness to e-mails from system admins requesting information or requiring immediate action regarding your cluster use.

- Repeated problems caused by your cluster use which go unsolved even after attempts to resolve the issue.

- Your cluster use privileges will be returned when all parties are confident that you understand the problem and it won’t reoccur.

- Your jobs won’t be canceled for:

- Scheduled maintenance. This is planned in advance and jobs that would run during scheduled maintenance times won’t start until the end of maintenance.

What to do in case of problems?

When you encounter problems, please follow the subsequent steps, in the indicated order:

- First, please contact your colleagues and fellow cluster users in your lab, concerning problems with your code, job performance and efficiency. They may be running similar jobs and potentially have solutions for your problem.

- You can also ask questions to fellow users on the Mattermost channel.

- For prolonged problems, your initial contact point is your supervisor/PI.

- As a final step, you can contact the cluster administrators for technical sysadmin problems or persistent efficiency problems, or for more information if you are not sure why you are banned from the cluster. You can do this by reporting your question to the Service Desk through the Self Service Portal. In your question, refer to the ‘DAIC cluster’.

- For severe recurring problems, complaints and suggestions for policy changes, or issues affecting multiple users, you can contact the DAIC advisory board to bring it up as an agenda point in the next user board meeting.

Usage guidelines

The available processing power and memory in DAIC are large, but still limited. You should use the available resources efficiently and fairly. This page lays out a few general principles and guidelines for considerate use of DAIC.

Using shared resources

The computing servers within DAIC are primarily meant to run large, long (non-interactive) jobs. You share these resources with other users across departments. Thus, you need to be cautious of your usage so you do not hinder other users.

To help protect the active jobs and resources, when a login server becomes overloaded, new logins to this server are automatically disabled. This means that you will sometimes have to wait for other jobs to finish and at other times ICT may have to kill a job to create space for other users.

One rule: Respect your fellow users.

Implication: we reserve the right to terminate any job or process that we feel is clearly interfering with the ability of others to complete work, regardless of technical measures or its resource usage.

Recommendations

- Always choose the login server with the lowest use (most importantly system load and memory usage), by checking the Current resource usage page or the servers command for information.

- Each server displays a message at login. Make sure you understand it before proceeding. This message includes the current load of the server, so look at it at every login.

- Only use the storage best suited to your files (See Storage).

- Do interactive code development, debugging and testing on your local machine as much as possible. On the cluster, try to organize your code as scripts instead of working interactively on the command line.

- If you need to test and debug on the cluster, for example on a GPU node, request an interactive session and do not work on the login node itself (see Interactive jobs on compute nodes).

- Save results frequently: your job can crash, the server can become overloaded, or the network shares can become unavailable.

- Write your code in a modular way, so that you can continue the job from the point where it last crashed.

- Actively monitor the status of your jobs and the loads of the servers.

- Make sure your job runs normally and is not hindering other jobs. Check the following at the start of a job and thereafter at least twice a day:

- If your job is not working correctly (or halted) because of a programming error, terminate it immediately; debug and fix the problem instead of just trying again (the result will almost certainly be exactly the same).

- If your screen’s Kerberos ticket has expired, renew it so your job can successfully save its results.

- Use the top program to monitor the CPU (%CPU) and memory (%MEM) usage of your code. If either is too high, kill your code so it doesn’t cause problems for other users.

- Don’t leave top running unless you are continuously watching it; press q to quit.

- Watch the current resource usage (see Current resource usage page or use the servers command), and if the server is running close to its limits (higher than 90% server load or memory, swap or disk usage), consider moving your job to a less busy server.

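The recommendations above to save results frequently and to be able to continue from the point of the last crash can be combined in a simple checkpoint pattern. The sketch below is only an illustration under assumptions: the JSON file format, the `save_every` interval, and the summation standing in for real work are all hypothetical choices, not DAIC conventions.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as fh:
            return json.load(fh)
    return {"next_step": 0, "total": 0}


def save_checkpoint(path, state):
    """Write to a temporary file first, then rename it over the old checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as fh:
        json.dump(state, fh)
    # The rename avoids leaving a half-written checkpoint behind after a crash.
    os.replace(tmp_path, path)


def run(path, n_steps, save_every=10):
    """Resume from the last checkpoint and process the remaining steps."""
    state = load_checkpoint(path)
    for step in range(state["next_step"], n_steps):
        state["total"] += step  # placeholder for the real computation
        state["next_step"] = step + 1
        if state["next_step"] % save_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state
```

If a job running `run("ckpt.json", 1000)` is interrupted, resubmitting the same call picks up at the last saved step rather than at step 0. How often to save is a trade-off between lost work and extra writes to storage.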

Computing on login nodes

You can use login nodes for basic tasks like compiling software, preparing submission scripts for the batch queue, submitting and monitoring jobs in the batch queue, analyzing results, and moving data or managing files.

Small-scale interactive work may be acceptable on login nodes if your resource requirements are minimal.

- Please do not run production research computations on the login nodes. If you need more resources, request an interactive session instead (see Interactive jobs on compute nodes).
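The note below suggests capping multi-threaded programs at 25% of a server's cores. As a minimal sketch of that arithmetic, the hypothetical helper `max_threads` computes the cap. Setting `OMP_NUM_THREADS` is shown as one common way to apply it and only affects OpenMP-based libraries; Java and MATLAB have their own thread settings.

```python
import os


def max_threads(total_cores, fraction=0.25):
    """Return a thread cap: at most `fraction` of the cores, but at least 1."""
    return max(1, int(total_cores * fraction))


# The 16-core example from the note gives a cap of 4 threads.
print(max_threads(16))

# Apply the cap on the current machine for OpenMP-based libraries.
# This must happen before those libraries are imported or started.
cores = os.cpu_count() or 1
cap = max_threads(cores)
os.environ["OMP_NUM_THREADS"] = str(cap)
```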

Note

Most multi-threaded applications (such as Java and MATLAB) will automatically use all CPU cores of a server, and thus take away processing power from other jobs. If you can specify the number of threads, set it to at most 25% (¼) of the cores in that server (for a server with 16 cores, use at most 4; this leaves enough processing capacity for other users). Also see How do I request CPUs for a multithreaded program?

3 - System specifications

This section provides an overview of the Delft AI Cluster (DAIC) infrastructure and its comparison with other compute facilities at TU Delft.

DAIC partitions and access/usage best practices

3.1 - Login Nodes

Login nodes act as the gateway to the DAIC cluster. They are intended for lightweight tasks such as job submission, file transfers, and compiling code (on specific nodes). They are not designed for running resource-intensive jobs, which should be submitted to the compute nodes.

Specifications and usage notes

| Hostname | CPU (Sockets x Model) | Total Cores | Total RAM | Operating System | GPU Type | GPU Count | Usage Notes |
|---|---|---|---|---|---|---|---|
| login1.daic.tudelft.nl | 1 x Intel(R) Xeon(R) CPU E5-2620 v4 @ 2.10GHz | 8 | 15.39 GB | OpenShift Enterprise | Quadro K2200 | 1 | For file transfers, job submission, and lightweight tasks. |
| login2.daic.tudelft.nl | 1 x Intel(R) Xeon(R) CPU E5-2683 v3 @ 2.00GHz | 1 | 3.70 GB | OpenShift Enterprise | N/A | N/A | Virtual server, for non-intensive tasks. No compilation. |
| login3.daic.tudelft.nl | 2 x Intel(R) Xeon(R) CPU E5-2683 v4 @ 2.10GHz | 32 | 503.60 GB | RHEV | Quadro K2200 | 1 | For large compilation and interactive sessions. |

Login1 resource limits (effective immediately)

Due to excessive background usage (especially from VSCode-related processes), per-user limits on login1 have been reduced to 1 CPU and 1 GB RAM. This helps prevent system-wide instability.

For compiling large code or running memory-heavy tasks, please use an interactive job (see Interactive jobs on compute nodes).

3.2 - Compute nodes

DAIC compute nodes are high-performance servers with multiple CPUs, large memory, and, on many nodes, one or more GPUs. The cluster is heterogeneous: nodes vary in processor types, memory sizes, GPU configurations, and performance characteristics.

If your application requires specific hardware features, you must request them explicitly in your job script (see Submitting jobs).
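Slurm's `--constraint` option is the usual way to require node features, with `&` meaning that all listed features must be present. As a hedged sketch, the hypothetical helper below builds such an option from feature names that appear in the SlurmActiveFeatures column of the node list (for example `avx512` and `ssd`). Check Submitting jobs for the options DAIC actually recommends.

```python
def constraint_option(features):
    """Build an sbatch --constraint option that requires ALL given features."""
    cleaned = [f.strip() for f in features if f.strip()]
    if not cleaned:
        raise ValueError("at least one feature is required")
    # In Slurm constraint syntax, '&' combines features with AND.
    return "--constraint=" + "&".join(cleaned)


# Require AVX-512 support and a local SSD.
print("#SBATCH " + constraint_option(["avx512", "ssd"]))
```

When passing the option on the command line instead of in a job script, quote the value so the shell does not interpret `&`.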

CPUs

Compute nodes have one or more CPUs (sockets), each with multiple cores. Most nodes support hyper-threading, which allows two threads per physical core. The number of cores per node is listed in the List of all nodes section.

Request CPUs based on how many threads your program can use. Requesting more CPUs than your program has threads doesn’t improve performance and wastes resources. Requesting fewer may slow your job due to thread contention.

To request CPUs for your jobs, see Job scripts.

GPUs

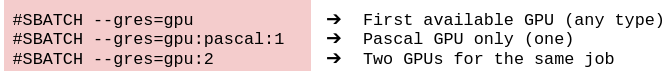

Many nodes in DAIC include one or more NVIDIA GPUs. GPU types differ in architecture, memory size, and compute capability. The table that follows summarizes the main GPU types in DAIC. For a per-node overview, see the List of all nodes section.

To request GPUs in your job, use --gres=gpu:<type>:<count>. See GPU jobs for more information.

| GPU (slurm) type | Count | Model | Architecture | Compute Capability | CUDA cores | Memory |

|---|---|---|---|---|---|---|

| l40 | 18 | NVIDIA L40 | Ada Lovelace | 8.9 | 18176 | 49152 MiB |

| a40 | 84 | NVIDIA A40 | Ampere | 8.6 | 10752 | 46068 MiB |

| turing | 24 | NVIDIA GeForce RTX 2080 Ti | Turing | 7.5 | 4352 | 11264 MiB |

| v100 | 11 | Tesla V100-SXM2-32GB | Volta | 7.0 | 5120 | 32768 MiB |

In the table above, the headers denote:

- Model: The official product name of the GPU.

- Architecture: The hardware design used in the GPU, which defines its specifications and performance characteristics. Each architecture (e.g., Ampere, Turing, Volta) represents a different GPU generation.

- Compute capability: A version number indicating the features supported by the GPU, including CUDA support. Higher values offer more advanced functionality.

- CUDA cores: The number of processing cores available on the GPU. More CUDA cores allow more parallel operations, improving performance for parallelizable workloads.

- Memory: The total internal memory on the GPU. This memory is required to store data for GPU computations. If the GPU’s memory is insufficient for a job, performance may be severely affected.
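To connect the table to the `--gres=gpu:<type>:<count>` syntax, the sketch below picks the GPU type with the least memory that still fits a given requirement and formats the matching option. The memory values are copied from the table above. The helper names and the 20 GiB requirement are hypothetical, and picking the smallest fitting type is just one possible heuristic.

```python
# GPU (slurm) types and memory in MiB, copied from the table above.
GPU_MEMORY_MIB = {
    "l40": 49152,
    "a40": 46068,
    "turing": 11264,
    "v100": 32768,
}


def smallest_fitting_gpu(required_mib):
    """Return the GPU type with the least memory that still fits the requirement."""
    candidates = [
        (mem, name) for name, mem in GPU_MEMORY_MIB.items() if mem >= required_mib
    ]
    if not candidates:
        raise ValueError(f"no single GPU type offers {required_mib} MiB")
    return min(candidates)[1]


def gres_option(gpu_type, count=1):
    """Format the sbatch option for requesting `count` GPUs of one type."""
    return f"--gres=gpu:{gpu_type}:{count}"


# Hypothetical job that needs about 20 GiB of GPU memory.
gpu = smallest_fitting_gpu(20 * 1024)
print("#SBATCH " + gres_option(gpu))
```

The table lists one memory size per type, but the node list shows that some V100 nodes carry 16 GB cards. Check the per-node overview, or a feature such as gpumem32, before relying on the table value alone.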

Memory

Each node has a fixed amount of RAM, shown in the List of all nodes section. Jobs may only use the memory explicitly requested using --mem or --mem-per-cpu. Exceeding the allocation may result in job failure.

Memory cannot be shared across nodes, and unused memory cannot be reallocated.

For memory-efficient jobs, consider tuning your requested memory to match your code’s peak usage closely. For more information, see Slurm basics.
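One way to turn a measured peak into a request is to add a small safety margin and round up. This is a sketch under assumptions: the 10% headroom is an arbitrary illustrative default, not a DAIC recommendation, and `mem_option` is a hypothetical helper.

```python
import math


def mem_option(peak_mib, headroom_percent=10):
    """Return an sbatch --mem option covering the measured peak plus headroom."""
    if peak_mib <= 0:
        raise ValueError("peak_mib must be positive")
    margin = math.ceil(peak_mib * headroom_percent / 100)
    # The 'M' suffix tells Slurm the value is in megabytes.
    return f"--mem={peak_mib + margin}M"


# A test run peaked at 7300 MiB, so request the peak plus 10%.
print("#SBATCH " + mem_option(7300))
```

Because exceeding the allocation may make the job fail, a small margin is usually safer than requesting the exact peak, while a very large margin keeps memory away from other jobs.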

Note

All compute nodes support Advanced Vector Extensions 1 and 2 (AVX, AVX2). Nodes that list the ht feature use hyper-threading, i.e., each physical core provides two logical CPUs. These are always allocated in pairs by the job scheduler (see Workload Scheduler).

List of all nodes

The following table gives an overview of current nodes and their characteristics.

Note

Slurm partitions typically correspond to research groups or departments that have contributed compute resources to the cluster. Most partition names follow the format <faculty>-<department> or <faculty>-<department>-<section>. A few exceptions exist for project-specific nodes.

For more information, see the Partitions section.
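The naming format described in the note can be read mechanically. The sketch below splits a partition name into its parts and returns `None` for names that do not follow the format, such as `general`. The `PartitionName` type and `parse_partition` function are hypothetical helpers for illustration only.

```python
from typing import NamedTuple, Optional


class PartitionName(NamedTuple):
    faculty: str
    department: str
    section: Optional[str]


def parse_partition(name):
    """Split '<faculty>-<department>[-<section>]'; return None for other names."""
    parts = name.split("-")
    if len(parts) == 2:
        return PartitionName(parts[0], parts[1], None)
    if len(parts) == 3:
        return PartitionName(parts[0], parts[1], parts[2])
    return None  # for example 'general' or project-specific partitions


for name in ["ewi-insy", "bk-ur-uds", "general"]:
    print(name, "->", parse_partition(name))
```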

| Hostname | CPU (Sockets x Model) | Cores per Socket | Total Cores | CPU Speed (MHz) | Total RAM (GiB) | Local Disk (/tmp, GiB) | GPU Type | GPU Count | SlurmPartitions | SlurmActiveFeatures |

|---|---|---|---|---|---|---|---|---|---|---|

| 100plus | 2 x Intel(R) Xeon(R) CPU E5-2683 v4 @ 2.10GHz | 16 | 32 | 2097.594 | 755 | 3174 | N/A | 0 | general;ewi-insy | avx;avx2;ht;10gbe;bigmem |

| 3dgi1 | 1 x AMD EPYC 7502P 32-Core Processor | 32 | 32 | 2500.000 | 251 | 148 | N/A | 0 | general;bk-ur-uds | avx;avx2;ht;10gbe;ssd |

| 3dgi2 | 1 x AMD EPYC 7502P 32-Core Processor | 32 | 32 | 2500.000 | 251 | 148 | N/A | 0 | general;bk-ur-uds | avx;avx2;ht;10gbe;ssd |

| awi01 | 2 x Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz | 18 | 36 | 3494.921 | 376 | 393 | Tesla V100-PCIE-32GB | 1 | general;tnw-imphys | avx;avx2;ht;10gbe;avx512;gpumem32;nvme;ssd |

| awi02 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 504 | 393 | Tesla V100-SXM2-16GB | 2 | general;tnw-imphys | avx;avx2;ht;10gbe;bigmem;ssd |

| awi04 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 503 | 5529 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;imphysexclusive |

| awi08 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 503 | 5529 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;imphysexclusive |

| awi09 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 503 | 5529 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;imphysexclusive |

| awi10 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 503 | 5529 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;imphysexclusive |

| awi11 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 503 | 5529 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;imphysexclusive |

| awi12 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 503 | 5529 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;imphysexclusive |

| awi19 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 251 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| awi20 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 251 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| awi21 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 251 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| awi22 | 2 x Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz | 14 | 28 | 2899.951 | 251 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| awi23 | 2 x Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz | 18 | 36 | 2672.149 | 376 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| awi24 | 2 x Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz | 18 | 36 | 3299.932 | 376 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| awi25 | 2 x Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz | 18 | 36 | 3542.370 | 376 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| awi26 | 2 x Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz | 18 | 36 | 2840.325 | 376 | 856 | N/A | 0 | general;tnw-imphys | avx;avx2;ht;ib;ssd |

| cor1 | 2 x Intel(R) Xeon(R) Gold 6242 CPU @ 2.80GHz | 16 | 32 | 3573.315 | 1510 | 7168 | Tesla V100-SXM2-32GB | 8 | general;me-cor | avx;avx2;ht;10gbe;avx512;gpumem32;ssd |

| gpu01 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu02 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu03 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu04 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu05 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu06 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu07 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu08 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu09 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;tnw-imphys | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu10 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;tnw-imphys | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu11 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | bk-ur-uds;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu12 | 2 x AMD EPYC 7413 24-Core Processor | 24 | 48 | 2650.000 | 503 | 415 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu14 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu15 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu16 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu17 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu18 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | general;ewi-st | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu19 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu20 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 1007 | 856 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu21 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 1007 | 856 | NVIDIA A40 | 3 | general;ewi-insy-prb;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu22 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 1007 | 856 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu23 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 1007 | 856 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu24 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 1007 | 856 | NVIDIA A40 | 3 | general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu25 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 1007 | 856 | NVIDIA A40 | 3 | mmll;general;ewi-insy | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu26 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 1007 | 856 | NVIDIA A40 | 3 | lr-asm;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu27 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | me-cor;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu28 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | me-cor;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu29 | 2 x AMD EPYC 7543 32-Core Processor | 32 | 64 | 2800.000 | 503 | 856 | NVIDIA A40 | 3 | me-cor;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu30 | 1 x AMD EPYC 9534 64-Core Processor | 64 | 64 | 2450.000 | 755 | 856 | NVIDIA L40 | 3 | ewi-insy;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu31 | 1 x AMD EPYC 9534 64-Core Processor | 64 | 64 | 2450.000 | 755 | 856 | NVIDIA L40 | 3 | ewi-insy;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu32 | 1 x AMD EPYC 9534 64-Core Processor | 64 | 64 | 2450.000 | 755 | 856 | NVIDIA L40 | 3 | ewi-me-sps;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu33 | 1 x AMD EPYC 9534 64-Core Processor | 64 | 64 | 2450.000 | 755 | 856 | NVIDIA L40 | 3 | lr-co;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu34 | 1 x AMD EPYC 9534 64-Core Processor | 64 | 64 | 2450.000 | 755 | 856 | NVIDIA L40 | 3 | ewi-insy;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| gpu35 | 1 x AMD EPYC 9534 64-Core Processor | 64 | 64 | 2450.000 | 755 | 856 | NVIDIA L40 | 3 | bk-ar;general | avx;avx2;10gbe;bigmem;gpumem32;ssd |

| grs1 | 2 x Intel(R) Xeon(R) CPU E5-2667 v4 @ 3.20GHz | 8 | 16 | 3499.804 | 251 | 181 | N/A | 0 | citg-grs;general | avx;avx2;ht;ib;ssd |

| grs2 | 2 x Intel(R) Xeon(R) CPU E5-2667 v4 @ 3.20GHz | 8 | 16 | 3499.804 | 251 | 181 | N/A | 0 | citg-grs;general | avx;avx2;ht;ib;ssd |

| grs3 | 2 x Intel(R) Xeon(R) CPU E5-2667 v4 @ 3.20GHz | 8 | 16 | 3499.804 | 251 | 181 | N/A | 0 | citg-grs;general | avx;avx2;ht;ib;ssd |

| grs4 | 2 x Intel(R) Xeon(R) CPU E5-2667 v4 @ 3.20GHz | 8 | 16 | 3500 | 251 | 181 | N/A | 0 | citg-grs;general | avx;avx2;ht;ib;ssd |

| influ1 | 2 x Intel(R) Xeon(R) Gold 6130 CPU @ 2.10GHz | 16 | 32 | 3385.711 | 376 | 197 | NVIDIA GeForce RTX 2080 Ti | 8 | influence;ewi-insy;general | avx;avx2;ht;10gbe;avx512;nvme;ssd |

| influ2 | 2 x Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz | 16 | 32 | 2300.000 | 187 | 369 | NVIDIA GeForce RTX 2080 Ti | 4 | influence;ewi-insy;general | avx;avx2;ht;10gbe;avx512;ssd |

| influ3 | 2 x Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz | 16 | 32 | 2300.000 | 187 | 369 | NVIDIA GeForce RTX 2080 Ti | 4 | influence;ewi-insy;general | avx;avx2;ht;10gbe;avx512;ssd |

| influ4 | 2 x AMD EPYC 7452 32-Core Processor | 32 | 64 | 2350.000 | 252 | 148 | N/A | 0 | influence;ewi-insy;general | avx;avx2;ht;10gbe;ssd |

| influ5 | 2 x AMD EPYC 7452 32-Core Processor | 32 | 64 | 2350 | 503 | 148 | N/A | 0 | influence;ewi-insy;general | avx;avx2;ht;10gbe;bigmem;ssd |

| influ6 | 2 x AMD EPYC 7452 32-Core Processor | 32 | 64 | 2350 | 503 | 148 | N/A | 0 | influence;ewi-insy;general | avx;avx2;ht;10gbe;bigmem;ssd |

| insy15 | 2 x Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz | 16 | 32 | 2300.000 | 754 | 416 | NVIDIA GeForce RTX 2080 Ti | 4 | ewi-insy;general | avx;avx2;ht;10gbe;avx512;bigmem;ssd |

| insy16 | 2 x Intel(R) Xeon(R) Gold 5218 CPU @ 2.30GHz | 16 | 32 | 2300.000 | 754 | 416 | NVIDIA GeForce RTX 2080 Ti | 4 | ewi-insy;general | avx;avx2;ht;10gbe;avx512;bigmem;ssd |

| Total (66 nodes) | 3016 cores | 35.02 TiB | 76.79 TiB | 137 GPU |

3.3 - Storage

Storage

DAIC compute nodes have direct access to the TU Delft home, group and project storage. You can use your TU Delft installed machine or an SCP or SFTP client to transfer files to and from these storage areas and others (see data transfer), as demonstrated throughout this page.

File System Overview

Unlike TU Delft’s DelftBlue, DAIC does not have a dedicated storage filesystem. This means no /scratch space for storing temporary files (see DelftBlue’s Storage description and Disk quota and scratch space). Instead, DAIC relies on direct connection to the TU Delft network storage filesystem (see Overview data storage) from all its nodes, and offers the following types of storage areas:

Personal storage (aka home folder)

The Personal Storage is private and is meant to store personal files (program settings, bookmarks). A backup service protects your home files from both hardware failures and user error (you can restore previous versions of files from up to two weeks ago). The available space is limited by a quota (see Quotas) and is not intended for storing research data.

You have two (separate) home folders: one for Linux and one for Windows (because Linux and Windows store program settings differently). You can access these home folders from a machine (running Linux or Windows OS) using a command line interface or a browser via TU Delft's webdata. For example, the Windows home has a My Documents folder, which can be found on a Linux machine under /winhome/<YourNetID>/My Documents.

| Home directory | Access from | Storage location |

|---|---|---|

| Linux home folder | Linux | /home/nfs/<YourNetID> |

| | Windows | only accessible using an scp/sftp client (see SSH access) |

| | webdata | not available |

| Windows home folder | Linux | /winhome/<YourNetID> |

| | Windows | H: or \\tudelft.net\staff-homes\[a-z]\<YourNetID> |

| | webdata | https://webdata.tudelft.nl/staff-homes/[a-z]/<YourNetID> |

It’s possible to access the backups yourself. In Linux the backups are located under the (hidden, read-only) ~/.snapshot/ folder. In Windows you can right-click the H: drive and choose Restore previous versions.

Note

To see your disk usage, run something like:

du -h '</path/to/folder>' | sort -h | tail

Group storage

The Group Storage is meant to share files (documents, educational and research data) with department/group members. The whole department or group has access to this storage, so this is not for confidential or project data. There is a backup service to protect the files, with previous versions up to two weeks ago. There is a Fair-Use policy for the used space.

| Destination | Access from | Storage location |

|---|---|---|

| Group Storage | Linux | /tudelft.net/staff-groups/<faculty>/<department>/<group> or /tudelft.net/staff-bulk/<faculty>/<department>/<group>/<NetID> |

| | Windows | M: or \\tudelft.net\staff-groups\<faculty>\<department>\<group> or L: or \\tudelft.net\staff-bulk\ewi\insy\<group>\<NetID> |

| | webdata | https://webdata.tudelft.nl/staff-groups/<faculty>/<department>/<group>/ |

Project Storage

The Project Storage is meant for storing (research) data (datasets, generated results, download files and programs, …) for projects. Only the project members (including external persons) can access the data, so this is suitable for confidential data (but you may want to use encryption for highly sensitive confidential data). There is a backup service and a Fair-Use policy for the used space.

Project leaders (or supervisors) can request a Project Storage location via the Self-Service Portal or the Service Desk.

| Destination | Access from | Storage location |

|---|---|---|

| Project Storage | Linux | /tudelft.net/staff-umbrella/<project> |

| | Windows | U: or \\tudelft.net\staff-umbrella\<project> |

| | webdata | https://webdata.tudelft.nl/staff-umbrella/<project> or |

Tip

Data deleted from project storage (staff-umbrella) remains in a hidden .snapshot folder. If data is accidentally deleted, you can recover it by copying it from the (hidden) .snapshot folder in your storage.

Local Storage

Local storage is meant for temporary storage of (large amounts of) data with fast access on a single computer. You can create your own personal folder inside the local storage. Unlike the network storage above, local storage is only accessible on that computer, not on other computers or through network file servers or webdata. There is no backup service nor quota. The available space is large but fixed, so leave enough space for other users. Files under /tmp that have not been accessed for 10 days are automatically removed. A process that has a file opened can access the data until the file is closed, even when the file is deleted. When the file is deleted, the file entry will be removed but the data will not be removed until the file is closed. Therefore, files that are kept open by a process can be used for longer. Additionally, files that are being accessed (read, written) multiple times within one day won’t be deleted.

| Destination | Access from | Storage location |

|---|---|---|

| Local storage | Linux | /tmp/<NetID> |

| | Windows | not available |

| | webdata | not available |
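As a rough illustration of the staging pattern that local storage is meant for, the sketch below creates a personal folder under /tmp, copies an input file there, works on the local copy, copies the result back, and frees the local space. The file names (input.dat, result.txt), the temporary source folder standing in for project storage, and the `wc -c` stand-in for a real computation are all hypothetical placeholders.

```shell
# Personal folder in node-local storage; falls back to `id -un` if $USER is unset.
scratch="/tmp/${USER:-$(id -un)}/job_$$"
mkdir -p "$scratch"

# Hypothetical input: a small placeholder file stands in for data that
# would normally live in project storage.
src_dir="$(mktemp -d)"
printf 'example data\n' > "$src_dir/input.dat"

# Stage the input onto local storage and work on the local copy.
cp "$src_dir/input.dat" "$scratch/"
wc -c < "$scratch/input.dat" > "$scratch/result.txt"   # stand-in for real work

# Copy the result back, then free the local space for other users.
cp "$scratch/result.txt" "$src_dir/"
rm -rf "$scratch"

echo "result: $(cat "$src_dir/result.txt")"
```

Removing the folder explicitly, rather than waiting for the automatic clean-up, keeps the fixed local space available to others.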

Memory Storage

Warning

Using /dev/shm is very risky, and should only be done when you understand all implications. Consider using the local storage (/tmp) as a safer alternative.

Cluster-wide Risk: When memory storage fills up, it can cause memory exhaustion that kills running jobs. The scheduler cannot identify the cause, so it continues launching new jobs that will also fail, potentially making the whole cluster unusable.

Clean up policy: Users must always clean up ‘/dev/shm’ after using it, even when jobs fail or are stopped via the scheduler.

Memory storage is meant for short-term storage of limited amounts of data with very fast access on a single computer. You can create your own personal folder inside the memory storage location. Memory storage is only accessible on that computer, and there is no backup service nor quota. The available space is limited and shared with programs, so leave enough space (the computer will likely crash when you don’t!). Files that have not been accessed for 1 day are automatically removed.

| Destination | Access from | Storage location |

|---|---|---|

| Memory storage | Linux | /dev/shm/<NetID> |

| | Windows | not available |

| | webdata | not available |
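One way to honour the clean-up policy is to register the clean-up with a shell trap, so that it also runs when the script fails or receives a termination signal. The following is only a sketch under that assumption: the folder layout and the placeholder workload are hypothetical, and SHM_ROOT is an illustrative variable that defaults to /dev/shm. A trap cannot run if the process is killed with SIGKILL, so a manual check is still needed after a hard kill.

```shell
SHM_ROOT="${SHM_ROOT:-/dev/shm}"                 # illustrative variable, defaults to /dev/shm
shm_dir="$SHM_ROOT/${USER:-$(id -un)}/job_$$"

# Remove everything this script placed in memory storage.
cleanup() {
    rm -rf "$shm_dir"
}
trap cleanup EXIT        # runs on normal exit and when the script exits on an error
trap 'exit 143' TERM     # turn a termination signal into an exit, which triggers cleanup
trap 'exit 130' INT      # same for an interrupt (Ctrl-C)

mkdir -p "$shm_dir"

# Show how much memory storage is still free before writing anything.
df -h "$SHM_ROOT"

# Placeholder workload: write a small file and read it back.
printf 'intermediate data\n' > "$shm_dir/cache.tmp"
cat "$shm_dir/cache.tmp"
```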

Info

Use this only when using other storage makes your job or the whole computer slow. Files in /dev/shm/ use system memory directly and do not count toward your job’s memory allocation. Request enough memory to cover both your job’s processing needs and any files stored in memory storage. Never exceed your allocated memory, not even for one second.

Checking quota limits

The different storage areas accessible on DAIC have quotas (or usage limits). It’s important to regularly check your usage to avoid job failures and ensure smooth workflows.

Helpful commands

- For /home:

$ quota -s -f ~

Disk quotas for user netid (uid 000000):

Filesystem space quota limit grace files quota limit grace

svm111.storage.tudelft.net:/staff_homes_linux/n/netid

12872M 24576M 30720M 19671 4295m 4295m

- For project space: You can use either:

$ du -hs /tudelft.net/staff-umbrella/my-cool-project

37G /tudelft.net/staff-umbrella/my-cool-project

Or:

$ df -h /tudelft.net/staff-umbrella/my-cool-project

Filesystem Size Used Avail Use% Mounted on

svm107.storage.tudelft.net:/staff_umbrella_my-cool-project 1,0T 38G 987G 4% /tudelft.net/staff-umbrella/my-cool-project

Note that the difference between the two reported sizes is due to snapshots, which are kept for up to 2 weeks.
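To make regular checks easier, the df output can be wrapped in a small helper that warns once a storage area passes a chosen fill level. This is a minimal sketch, not an official DAIC tool: the function names and the 90% default threshold are assumptions, and the path can be replaced with a project share such as /tudelft.net/staff-umbrella/<project>.

```shell
# Print the usage percentage (without the % sign) of the filesystem holding a path.
usage_percent() {
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Warn when usage reaches a threshold (default: 90%, an arbitrary choice).
check_usage() {
    local path="$1" threshold="${2:-90}" used
    used="$(usage_percent "$path")"
    if [ "$used" -ge "$threshold" ]; then
        echo "WARNING: $path is ${used}% full (threshold ${threshold}%)"
    else
        echo "OK: $path is ${used}% full"
    fi
}

check_usage "$HOME"
```

The `-P` flag asks df for the portable one-line-per-filesystem format, which keeps the awk field positions stable even for long filesystem names.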

3.4 - Scheduler

Workload scheduler

DAIC uses the Slurm scheduler to efficiently manage workloads. All jobs for the cluster have to be submitted as batch jobs into a queue. The scheduler then manages and prioritizes the jobs in the queue, allocates resources (CPUs, memory) for the jobs, executes the jobs and enforces the resource allocations. See the job submission pages for more information.

A Slurm-based cluster is composed of a set of login nodes that are used to access the cluster and submit computational jobs. A central manager orchestrates computational demands across a set of compute nodes. These nodes are organized logically into groups called partitions, which define job limits or access rights. The central manager provides fault-tolerant hierarchical communications to ensure optimal and fair use of available compute resources by eligible users, and makes it easier to run and schedule complex jobs across compute resources (multiple nodes).
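From a login node, the partitions and the queue can be inspected with the standard Slurm client commands. The snippet below is a small sketch that guards against the tools being absent (for example, when run on a personal machine); the slurm_status variable is only an illustrative name.

```shell
if command -v sinfo >/dev/null 2>&1; then
    sinfo --summarize                     # one line per partition with node counts
    squeue --user "${USER:-$(id -un)}"    # your own jobs in the queue
    slurm_status="available"
else
    slurm_status="unavailable"
    echo "Slurm client tools not found; run this on a DAIC login node."
fi
```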

3.5 - Cluster comparison

Cluster comparison

TL;DR

- Most AI training → DAIC.

- Many CPUs / high-memory or MPI jobs → DelftBlue.

- Distributed/experimental systems work → DAS-6.

- Bigger than local capacity or cross-institutional projects → Snellius (via SURF).

- Euro-scale GPU runs → LUMI (EuroHPC via SURF).

- Exascale runs → Jupiter (EuroHPC)

TU Delft clusters

DAIC is one of several clusters accessible to TU Delft CS researchers (and their collaborators). The table below gives a comparison between these in terms of use case, eligible users, and other characteristics.

Tip

- When in doubt, start on DAIC for prototyping. If you hit limits (time-to-solution, memory, scale), graduate to DelftBlue, then Snellius/LUMI.

- TU Delft has other clusters that are not listed here. These tend to be more specialized or have different access requirements.

| System | Best for | Strengths | Use it when | Access & Support |

|---|---|---|---|---|

| 🎓 DAIC | AI/ML training; data-centric workflows; GPU‑intensive workloads | Large NVIDIA GPU pool (L40, A40, RTX 2080 Ti, V100 SXM2); local expert support (REIT and ICT); direct TU Delft storage | Quick iteration, hyper‑parameter sweeps, demos, and almost any workload from participating groups; queues are generally shorter than DelftBlue but limited by available GPUs | Access • Specs • Community |

| 🎓 DelftBlue | CPU/MPI jobs; high‑memory runs; large per-GPU memory needed; education | Large CPU pool; larger NVIDIA GPUs (A100); dedicated scratch storage; local expert support (DHPC, ICT) | Many cores, tightly‑coupled MPI, long CPU jobs, or very high memory per node; education | Access • Specs • Community |

| 🎓 DAS-6 | Distributed systems research; streaming; edge/fog computing; in-network processing | Multi‑site testbed; mix of GPUs (16× A4000, 4× A5000) and CPUs | Cross‑cluster experiments, network‑sensitive prototypes | Access • Docs • Project |

| 🇳🇱 Snellius | National‑scale runs; larger GPU pools; cross‑institutional projects | Large CPU+GPU partitions (A100 and H100); mature SURF user support; common NL platform | When local capacity/queue limits progress or when collaborating with other Dutch institutions | Access • Docs • Specs |

| 🇪🇺 LUMI | Euro‑scale AI/data; very large GPU jobs; benchmarking at scale | Tier‑0 system with AMD MI250 GPUs (LUMI‑G); high‑performance I/O; strong EuroHPC ecosystem | Beyond Snellius capacity or part of a funded EU consortium / EuroHPC allocation | Access • Docs |

Other EuroHPC resources

In addition to LUMI, TU Delft researchers can also apply for access to other EuroHPC Tier-0/1 systems through EuroHPC Joint Undertaking calls. Examples include:

- Jupiter (Germany): Europe’s first exascale supercomputer, targeting the most demanding HPC and AI workloads.

- Leonardo (Italy): Pre-exascale system with hybrid architecture: a large GPU partition (Nvidia) for AI and a CPU partition for HPC simulations.

- MareNostrum 5 (Spain): Pre-exascale general-purpose system, with Nvidia GPUs.

- MeluXina (Luxembourg): Petascale modular system, suitable for AI, digital twins, quantum simulation, and traditional computational workloads.

- Karolina (Czech Republic): Petascale system for HPC, AI, and Big Data applications.

- Discoverer (Bulgaria): Petascale system focused on simulations and modelling.

These systems complement LUMI and broaden the options for AI, simulation, and large-scale scientific workflows at the European level.

TU Delft cloud resources

For both education and research activities, TU Delft has established the Cloud4Research program. Cloud4Research aims to facilitate the use of public cloud resources, primarily Amazon AWS. At the administrative level, Cloud4Research provides AWS accounts with an initial budget. Subsequent billing can be incurred via a project code, instead of a personal credit card. At the technical level, the ICT Innovation team provides intake meetings to facilitate getting started. Please refer to the Policies and FAQ pages for more details.

Strategic opportunities

Are you planning infrastructure proposals or strategic partnerships? Contact us to discuss collaborative opportunities via this TopDesk DAIC Contact Us form.

4 - User manual

4.1 - Connecting to DAIC

SSH access

If you have a valid DAIC account (see Access and accounts), you can access DAIC resources using an SSH client. SSH (Secure SHell) is a protocol that allows you to connect to a remote computer via a secure network connection. SSH supports remote command-line login and remote command execution. SCP (Secure CoPy) and SFTP (Secure File Transfer Protocol) are file transfer protocols based on SSH (see Wikipedia's SSH page).

SSH clients

Most modern operating systems like Linux, macOS, and Windows 10 include SSH, SCP, and SFTP clients (part of the OpenSSH package) by default. If not, you can install third-party programs like MobaXterm, PuTTY, or FileZilla.

Connecting to DAIC from inside and outside TU Delft network

Access from the TU Delft Network

To connect to DAIC within the TU Delft network (i.e., via eduroam or a wired connection), open a command-line interface (prompt or terminal, see Wikipedia's CLI page), and run the following command:

$ ssh <YourNetID>@login.daic.tudelft.nl # Or

$ ssh login.daic.tudelft.nl # If your username matches your NetID

<YourNetID> is your TU Delft NetID. If the username on the machine you are connecting from matches your NetID, you can omit the <YourNetID>@ part.

This will log you in to DAIC’s login1.daic.tudelft.nl node for now. Note that this setup might change in the future as the system undergoes migration, potentially reducing the number of login nodes.

Note

Currently DAIC has 3 login nodes: login1.daic.tudelft.nl, login2.daic.tudelft.nl, and login3.daic.tudelft.nl. You can connect to any of these nodes directly as per your needs. For more on the choice of login nodes, see DAIC login nodes.

Note

Upon first connection to an SSH server, you will be prompted to confirm the server’s identity, with a message similar to:

The authenticity of host 'login.daic.tudelft.nl (131.180.183.244)' can't be established.

ED25519 key fingerprint is SHA256:MURg8IQL8oG5o2KsUwx1nXXgCJmDwHbttCJ9ljC9bFM.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'login.daic.tudelft.nl' (ED25519) to the list of known hosts.

A distinct fingerprint will be shown for each login node, as below:

SHA256:MURg8IQL8oG5o2KsUwx1nXXgCJmDwHbttCJ9ljC9bFM

SHA256:MURg8IQL8oG5o2KsUwx1nXXgCJmDwHbttCJ9ljC9bFM

SHA256:O3AjQQjCfcrwJQ4Ix4dyGaUoYiIv/U+isMT5+sfeA5Q

Once the identity is confirmed, enter your password when prompted (nothing will be printed as you type your password):

The HPC cluster is restricted to authorized users only.

YourNetID@login.daic.tudelft.nl's password:

Next, a welcome message will be shown:

Last login: Mon Jul 24 18:36:23 2023 from tud262823.ws.tudelft.net

#########################################################################

# #

# Welcome to login1, login server of the HPC cluster. #

# #

# By using this cluster you agree to the terms and conditions. #

# #

# For information about using the HPC cluster, see: #

# https://login.hpc.tudelft.nl/ #

# #

# The bulk, group and project shares are available under /tudelft.net/, #

# your windows home share is available under /winhome/$USER/. #

# #

#########################################################################

18:40:16 up 51 days, 6:53, 9 users, load average: 0,82, 0,36, 0,53

You can now verify your environment with basic commands:

YourNetID@login1:~$ hostname # show the current hostname

login1.hpc.tudelft.nl

YourNetID@login1:~$ echo $HOME # show the path to your home directory

/home/nfs/YourNetID

YourNetID@login1:~$ pwd # show current path

/home/nfs/YourNetID

YourNetID@login1:~$ exit # exit current connection

logout

Connection to login.daic.tudelft.nl closed.

In this example, the user, YourNetID, is logged in via the login node login1.hpc.tudelft.nl as can be seen from the hostname output. The user has landed in the $HOME directory, as can be seen by printing its value, and checked by the pwd command. Finally, the exit command is used to exit the cluster.

Graphical applications

We discourage running graphical applications (via ssh -X) on DAIC login nodes, as GUI applications are not supported on the HPC systems.

Access from outside university network using a VPN

Direct access to DAIC from outside the university network is blocked by a firewall. Therefore, a VPN is needed.

You need to use TU Delft’s EduVPN or OpenVPN (see TU Delft’s Access via VPN recommendations) to access DAIC directly. Once connected to the VPN, you can ssh to DAIC directly, as in Access from the TU Delft Network.

VPN access trouble?

If you are having trouble accessing DAIC via the VPN, please report an issue via this Self-Service link.

Using the Linux Bastion Server

As of January 16th 2025, access to TU Delft bastion hosts is only possible via a VPN. Therefore, it is now recommended to activate a TU Delft VPN and directly connect to DAIC (instead of jumping via the bastion host).

Simplifying SSH with Configuration Files

To simplify SSH connections, you can store configurations in a file on your local machine. The SSH configuration file can be created (or found, if it already exists) at ~/.ssh/config on Linux/Mac systems, or at C:\Users\<YourUserName>\.ssh\config on Windows.

For example, on a Linux system, you can have the following lines in the configuration file:

~/.ssh/config

Host daic

# HostName can be any of the login nodes

HostName login.daic.tudelft.nl

User <YourNetID>

where:

- The Host keyword starts the SSH configuration block and specifies the name (or pattern of names, like daic in this example) to which the configuration entries will apply.

- The HostName is the actual hostname to log into. Numeric IP addresses are also permitted (both on the command line and in HostName specifications).

- The User is the login username. This is especially important when the username differs between your machine and the remote server/cluster.

You can then connect to DAIC from inside TU Delft network (or behind TU Delft VPN) by just typing the following command:

$ ssh daic
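To check how ssh resolves the alias without opening a connection, OpenSSH's `-G` option (available in reasonably recent releases) prints the effective settings for a host. The sketch below writes the example block to a scratch file and queries it with `-F`, so it does not touch your real ~/.ssh/config; `netid` is a placeholder username, not a real account.

```shell
# Write the example configuration to a scratch file (not your real config).
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
Host daic
    HostName login.daic.tudelft.nl
    User netid
EOF

if command -v ssh >/dev/null 2>&1; then
    # -G prints the resolved options and exits without connecting;
    # -F points ssh at the scratch file instead of ~/.ssh/config.
    ssh -G -F "$cfg" daic | grep -E '^(hostname|user) '
fi
```

Running `ssh -G daic` against your own configuration file shows the same two settings once the block is in place.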

Efficient SSH Connections with SSH Multiplexing

SSH multiplexing allows you to reuse an existing connection for multiple SSH sessions, reducing the time spent entering your password for every new connection. After the first connection is established, subsequent connections will be much faster since the existing control connection is reused.

To enable SSH multiplexing, add the following lines to your SSH configuration file. Assuming a Linux/Mac system, you can add the following lines to ~/.ssh/config:

~/.ssh/config

Host *

ControlMaster auto

ControlPath /tmp/ssh-%r@%h:%p

where:

- The ControlPath specifies where to store the “control socket” for the multiplexed connections. %r refers to the remote login name, %h refers to the target host name, and %p refers to the destination port. This ensures that SSH separates control sockets for different connections.

- The ControlMaster setting activates multiplexing. With the auto setting, SSH will use an existing master connection if available or create a new one when necessary.

This setup will speed up connections after the first one and reduce the need to repeatedly enter your password for each new SSH session.
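A master connection can be inspected or closed with ssh's `-O` control commands. The two helpers below are a small sketch; the function names are made up for illustration, and the `daic` alias assumes a matching Host entry in your configuration file.

```shell
# Report whether a master connection for the given host alias is running.
mux_status() {
    ssh -O check "${1:-daic}"
}

# Ask the master connection to exit, which closes the shared connection.
mux_close() {
    ssh -O exit "${1:-daic}"
}

# Example usage (after an initial `ssh daic` in another terminal):
#   mux_status daic
#   mux_close daic
```

Closing the master explicitly is useful when a stale control socket prevents new sessions from connecting.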

Note

On Windows you may need to adjust the ControlPath to match a valid path for your operating system. For example, instead of /tmp/, you might use a path like C:/Users/<YourUserName>/AppData/Local/Temp/.

Important

SSH public key logins (passwordless login) are not supported on DAIC, because Kerberos authentication is required to access your home directory. You will need to enter your password for each session.

4.2 - Data management & transfer

Data Management Guidelines

DAIC login and compute nodes have direct access to standard TU Delft network storage, including your personal home folder, group storage, and project storage. It is important to use the correct storage location for your data, as each has different use cases, access rights, and quota limits.

For example, Project Storage (staff-umbrella) is the recommended location for research data, datasets, and code. In contrast, staff-bulk is a legacy storage area that is being phased out. For a complete overview of storage types, official guidelines, and quota limits, always consult the TU Delft Overview data storage.

This page explains the best methods for transferring data to and from these storage locations.

Recommended Workflow: Direct Data Download

The most efficient way to download large datasets from external sources (e.g. collaborators or public repositories) is to transfer them directly from your local computer to your TU Delft project storage. This avoids using the DAIC login and compute nodes, which are optimized for computation, not large data transfers, and avoids unnecessary load on the internal network.

Note

Do not connect to DAIC using sshfs! That would only (over)load the network connection to the login nodes, which would affect the interactive work of other users. Instead, download data directly to your project storage as described below.

Follow these steps to download data directly to your project storage (and access it from DAIC):

1. Access your DAIC storage from your local computer

You can either mount the storage as a network drive or use an SFTP client. Mounting is often more convenient as it makes the remote storage appear like a local folder. Choose the appropriate method for your operating system:

Windows

For TU Delft-managed computers:

- Project Data Storage is mounted automatically under This PC as Project Data (U:) or \\tudelft.net\staff-umbrella.

For personal computers:

- Connect to EduVPN first.

- Install WebDrive and connect to sftp.tudelft.nl. Click on staff-umbrella (this is the Project Data Storage).

macOS

Option 1: Using Finder

- Press ⌘K or choose Go > Connect to Server.

- Enter smb://tudelft.net/staff-umbrella/<your_project_name> and click Connect.

- (Optional) Add this address to your Favorite Servers for easy access later.

Option 2: Using an SFTP client (e.g., Terminal, FileZilla, CyberDuck)

Connect to sftp.tudelft.nl with your NetID and password. From the terminal, you can use:

sftp <YourNetID>@sftp.tudelft.nl

cd staff-umbrella/<your_project_name>

put data.zip # Upload a file (data.zip) to your storage

get results.zip # Download a file (results.zip) from your storage

Graphical clients like FileZilla or CyberDuck provide a drag-and-drop interface for the same purpose.

Linux

For TU Delft-managed computers:

- For managed Ubuntu 22.04, contact ICT for setting up the mount.

- For Ubuntu 18.04, storage is mounted under /tudelft.net/staff-umbrella/:

  - You can access it via the terminal: cd /tudelft.net/staff-umbrella/<your_project_name>

  - Or via the file manager (nautilus or dolphin): under Other locations > Computer > tudelft.net > staff-umbrella > <your_project_name>

For personal computers:

Option 1: Mount with sshfs

mkdir ~/storage_mount

sshfs <YourNetID>@sftp.tudelft.nl:/staff-umbrella/<your_project_name> ~/storage_mount

ls ~/storage_mount # Check contents of your project storage

And, after you are done with the mount:

fusermount -u ~/storage_mount

Option 2: Use sftp

sftp <YourNetID>@sftp.tudelft.nl

cd staff-umbrella/<your_project_name>

put data.zip # Upload a file (data.zip) to your storage

get results.zip # Download a file (results.zip) from your storage

2. Download the data directly to the storage location

Once you have mounted or connected to your storage, you can use standard tools like wget, curl, or your web browser to download files directly into that location.

For example, if you mounted your storage on Linux at ~/storage_mount, you can download a large dataset into your project folder with wget:

wget -P ~/storage_mount/datasets/ https://www.robots.ox.ac.uk/~vgg/data/flowers/102/102flowers.tgz

The file (the Oxford Flowers 102 Dataset in this example) downloads directly to your project folder in the staff-umbrella storage, using your local machine’s network connection.
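Large downloads can be interrupted, and a mount can silently drop. As a hedged sketch, the helper below first checks that the destination folder exists and is writable, then calls wget with `--continue` so that a rerun resumes a partial file. The function name is hypothetical and the usage line is commented out to avoid starting a download by accident.

```shell
# Download a URL into a folder on mounted storage, resuming partial files.
download_to_storage() {
    local url="$1" dest_dir="$2"
    if [ ! -d "$dest_dir" ] || [ ! -w "$dest_dir" ]; then
        echo "Destination $dest_dir is missing or not writable; is the storage mounted?" >&2
        return 1
    fi
    # --continue resumes a partially downloaded file;
    # --directory-prefix is the long form of -P.
    wget --continue --directory-prefix="$dest_dir" "$url"
}

# Example usage:
#   download_to_storage "https://www.robots.ox.ac.uk/~vgg/data/flowers/102/102flowers.tgz" ~/storage_mount/datasets
```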

Command-Line Transfer Tools

Your Linux and Windows Personal Storage, as well as the Project and Group Storage, are also available worldwide through an SCP/SFTP client.

For direct transfers between your local machine and DAIC, or for scripting automated workflows, you can use command-line tools like scp and rsync.

SCP (Secure Copy)

The scp command provides a simple way to copy files over a secure channel. It has the following basic syntax:

$ scp <source_file> <target_destination> # for files

$ scp -r <source_folder> <target_destination> # for folders

For example, to transfer a file from your computer to DAIC:

$ scp mylocalfile [<YourNetID>@]login.daic.tudelft.nl:~/destination_path_on_DAIC/

To transfer a folder (recursively) from your computer to DAIC:

$ scp -r mylocalfolder [<YourNetID>@]login.daic.tudelft.nl:~/destination_path_on_DAIC/

To transfer a file from DAIC to your computer:

$ scp [<YourNetID>@]login.daic.tudelft.nl:~/origin_path_on_DAIC/remotefile ./

To transfer a folder from DAIC to your computer:

$ scp -r [<YourNetID>@]login.daic.tudelft.nl:~/origin_path_on_DAIC/remotefolder ./

The above commands work both from the university network and when using EduVPN. If a “jump” via linux-bastion is needed (see Access from outside university network), replace scp with scp -J <YourNetID>@linux-bastion.tudelft.nl and keep the rest of the command unchanged:

# Transfer a local file to DAIC via the bastion host

$ scp -J [<YourNetID>@]linux-bastion.tudelft.nl <localfile> [<YourNetID>@]login.daic.tudelft.nl:/tudelft.net/staff-umbrella/<your_project_name>/

# Transfer a remote file from DAIC to your local machine via the bastion host

$ scp -J [<YourNetID>@]linux-bastion.tudelft.nl [<YourNetID>@]login.daic.tudelft.nl:/tudelft.net/staff-umbrella/<your_project_name>/<remotefile> ./

Where:

- Case is important.

- Items between < > brackets are user-supplied values (so replace with your own NetID, file or folder name).

- Items between [ ] brackets are optional: when your username on your local computer is the same as your NetID username, you don’t have to specify it.

- When you specify your NetID username, don’t forget the @ character between the username and the computer name.
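For repeated transfers, the scp variants above can be folded into one helper that adds the jump host only when needed. This is a sketch under stated assumptions: daic_upload, DAIC_USER, USE_BASTION, BASTION_HOST and SCP are hypothetical names chosen for this example, and only the scp options already shown above (-r and -J) are used.

```shell
# Sketch: copy a file or folder to DAIC, optionally through a jump host.
# daic_upload, DAIC_USER, USE_BASTION, BASTION_HOST and SCP are hypothetical
# names for this example, not part of any DAIC tooling.
daic_upload() {
    # Usage: daic_upload <local_path> <remote_path>
    src="$1"
    dest="$2"
    user="${DAIC_USER:-$(id -un)}"      # fall back to the local username
    bastion="${BASTION_HOST:-linux-bastion.tudelft.nl}"

    set -- -r                           # -r handles folders and plain files
    if [ "${USE_BASTION:-0}" = "1" ]; then
        set -- "$@" -J "${user}@${bastion}"
    fi
    # Quoting keeps local names with spaces intact.
    ${SCP:-scp} "$@" "$src" "${user}@login.daic.tudelft.nl:${dest}"
}
```

For example, after setting USE_BASTION=1 and DAIC_USER to your NetID, daic_upload mylocalfolder '~/destination_path_on_DAIC/' copies the folder via the bastion host. Students would set BASTION_HOST=student-linux.tudelft.nl. Setting SCP=echo prints the arguments instead of copying, which helps when checking a command before running it.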

Note for students

Please use student-linux.tudelft.nl instead of linux-bastion.tudelft.nl as an intermediate server!

Hint

Use quotes when file or folder names contain spaces or special characters.

rsync

rsync is a robust file copying and synchronization tool commonly used in Unix-like operating systems. It allows you to transfer files and directories efficiently, both locally and remotely. rsync supports options that enable compression, preserve file attributes, and allow for incremental updates.

Basic Usage

Copy files locally:

rsync [options] <source> <destination>

This command copies files and directories from the source to the destination.

Copy files remotely:

rsync [options] <source> <user>@<remote_host>:<destination>

This command transfers files from a local source to a destination on a remote host.

Note

When sending data to staff-umbrella or staff-bulk, you must use the --no-perms option to avoid errors, as the underlying network filesystem does not support changing permissions.

A recommended command to use is:

$ rsync --progress -avz --no-perms <source_file> [<YourNetID>@]login.daic.tudelft.nl:<destination_umbrella_directory>

This command is effective because:

- --progress shows the transfer progress.

- -a (archive mode) efficiently copies directories and preserves file attributes like timestamps.

- -v provides verbose output.

- -z compresses data to speed up the transfer.

- --no-perms prevents errors related to file permissions on the destination.

Examples

Synchronize a local directory with a remote directory:

rsync -avz /path/to/local/dir user@remote_host:/path/to/remote/dir

This synchronizes a local directory with a remote directory, using archive mode (-a) to preserve file attributes, verbose mode (-v) for detailed output, and compression (-z) for efficient transfer.

Synchronize a remote directory with a local directory:

rsync -avz user@remote_host:/path/to/remote/dir /path/to/local/dir

This transfers files from a remote directory to a local directory, using the same options as the previous example.

Delete files in the destination that are not present in the source:

rsync -av --delete /path/to/source/dir /path/to/destination/dir

This synchronizes the source and destination directories and deletes files in the destination that are not in the source.

Exclude certain files or directories during transfer:

rsync -av --exclude='*.tmp' /path/to/source/dir /path/to/destination/dir

This synchronizes the source and destination directories, excluding files with the .tmp extension.
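Because --delete removes files, it is worth previewing a mirror before running it. The sketch below pairs --delete with rsync's --dry-run option, which lists what would happen without changing anything. The helper names sync_preview and sync_apply are hypothetical. Note the trailing slashes: with a trailing slash on the source, rsync copies the contents of the directory; without it, rsync creates a subdirectory named after the source inside the destination.

```shell
# Sketch: preview a mirror with --delete, then apply it.
# sync_preview and sync_apply are hypothetical helper names.
sync_preview() {
    # Usage: sync_preview <source_dir> <destination_dir>
    # --dry-run only reports; files to be removed are listed as "deleting ...".
    rsync -av --delete --dry-run "$1/" "$2/"
}

sync_apply() {
    # Same transfer without --dry-run: this really copies and deletes.
    rsync -av --delete "$1/" "$2/"
}
```

A typical workflow is to run sync_preview first, read the list of files marked as deleting, and only then run sync_apply with the same two arguments.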

Other Options in rsync

In addition to the commonly used options, rsync provides several other options for more advanced control and customization during file transfers:

- --dry-run: Perform a trial run without making any changes. This option allows you to see what would be done without actually doing it.

- --checksum: Use checksums instead of file size and modification time to determine if files should be transferred. This is more precise but slower.

- --partial: Keep partially transferred files and resume them later. This is useful in case of an interrupted transfer.

- --partial-dir=DIR: Keep partially transferred files in DIR instead of in the destination directory. rsync picks them up from there when the transfer is resumed.

- --bwlimit=KBPS: Limit the bandwidth used by the transfer to the specified rate in kilobytes per second. Useful for managing network load.

- --timeout=SECONDS: Set a maximum I/O wait time in seconds. If no data is transferred within this time, rsync will exit.

- --no-implied-dirs: When used with --relative, do not transfer the attributes of the implied directories (the parent path elements of each source name).

- --files-from=FILE: Read a list of source files from the specified FILE. This can be useful when you want to transfer specific files.

- --update: Skip files that are newer on the destination than the source. This is useful for incremental backups.

- --ignore-existing: Skip files that already exist on the destination. Useful when you want to avoid overwriting existing files.

- --inplace: Update files in place instead of creating temporary files and renaming them later. This can save disk space and improve speed.

- --append: Append data to shorter files on the destination instead of replacing them. This assumes that the existing data matches the start of the source file.

- --append-verify: Like --append, but also verify the complete file with a checksum to ensure integrity.

- --backup: Make backups of files that are overwritten or deleted during the transfer. By default, a ~ is appended to the backup filename.

- --backup-dir=DIR: Specify a directory to store backup files.

- --suffix=SUFFIX: Specify a suffix to append to backup files instead of the default ~.

- --progress: Display the progress of the transfer, including the speed and the number of bytes transferred. This is useful for monitoring long transfers and seeing how much data has been copied so far.

These options, along with others, provide additional flexibility and control over your rsync transfers, allowing you to fine-tune the synchronization process to meet your specific needs.
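As an illustration of combining these options, the sketch below retries an interrupted transfer while keeping partial files between attempts. It is one possible approach, not a DAIC-recommended script: rsync_retry, MAX_TRIES, WAIT_SECONDS and RSYNC are hypothetical names, and the timeout value of 60 seconds is an arbitrary choice for the example.

```shell
# Sketch: retry an interrupted rsync transfer a limited number of times.
# rsync_retry, MAX_TRIES, WAIT_SECONDS and RSYNC are hypothetical names.
rsync_retry() {
    # Usage: rsync_retry <source> <destination>
    tries=0
    max="${MAX_TRIES:-5}"
    # --partial keeps half-transferred files so the next attempt can resume;
    # --timeout makes a stalled connection fail instead of hanging forever.
    until ${RSYNC:-rsync} -av --partial --no-perms --timeout=60 "$@"; do
        tries=$((tries + 1))
        if [ "$tries" -ge "$max" ]; then
            echo "giving up after $tries attempts" >&2
            return 1
        fi
        echo "transfer interrupted, retrying ($tries/$max)..." >&2
        sleep "${WAIT_SECONDS:-10}"
    done
}
```

The arguments are passed to rsync unchanged, so the source and destination are written exactly as in the commands above, for example a local file followed by [<YourNetID>@]login.daic.tudelft.nl:<destination_umbrella_directory>.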

4.3 - Software

4.3.1 - Operating system

At present, DAIC and DelftBlue use different software stacks. This includes differences in the operating system (CentOS 7 for DAIC vs. Red Hat Enterprise Linux 8 for DelftBlue) and, consequently, the available modules.

Be mindful that code or environments developed on one system may not run identically on the other. Check the DelftBlue modules and DAIC software pages to avoid portability issues.

Operating system

DAIC runs the Red Hat Enterprise Linux 7 distribution. Most common software—such as programming languages, libraries, and development tools—is installed on the nodes (see Available software).

However, niche or recently released packages may be missing. If your work depends on a state-of-the-art program not yet available for Red Hat 7, you’ll need to install it manually. See Installing software for instructions.

4.3.2 - Available software

General software

Most common general software, like programming languages and libraries, is installed on the DAIC nodes. To check if the program that you need is pre-installed, you can simply try to start it:

$ python

Python 2.7.5 (default, Jun 28 2022, 15:30:04)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> quit()

To find out exactly which binary is used, run the which command:

$ which python

/usr/bin/python

Alternatively, you can try to locate the program or library using the whereis command:

$ whereis python

python: /usr/bin/python3.4m-config /usr/bin/python3.6m-x86_64-config /usr/bin/python2.7 /usr/bin/python3.6-config /usr/bin/python3.4m-x86_64-config /usr/bin/python3.6m-config /usr/bin/python3.4 /usr/bin/python3.4m /usr/bin/python2.7-config /usr/bin/python3.6 /usr/bin/python3.4-config /usr/bin/python /usr/bin/python3.6m /usr/lib/python2.7 /usr/lib/python3.4 /usr/lib/python3.6 /usr/lib64/python2.7 /usr/lib64/python3.4 /usr/lib64/python3.6 /etc/python /usr/include/python2.7 /usr/include/python3.4m /usr/include/python3.6m /usr/share/man/man1/python.1.gz

Or, you can check if the package is installed using the rpm -q command as follows:

$ rpm -q python

python-2.7.5-94.el7_9.x86_64

$ rpm -q python4

package python4 is not installed

You can also search with wildcards:

$ rpm -qa 'python*'

python2-wheel-0.29.0-2.el7.noarch

python2-cryptography-1.7.2-2.el7.x86_64

python34-virtualenv-15.1.0-5.el7.noarch

python-networkx-1.8.1-12.el7.noarch

python-gobject-3.22.0-1.el7_4.1.x86_64

python-gofer-2.12.5-3.el7.noarch

python-iniparse-0.4-9.el7.noarch

python-lxml-3.2.1-4.el7.x86_64

python34-3.4.10-8.el7.x86_64

python36-numpy-f2py-1.12.1-3.el7.x86_64

...
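When a workflow depends on several programs, checking them one by one gets tedious. The following sketch loops over a list of command names and reports which ones are on the PATH, using the shell built-in command -v. The function name check_tools is an assumption made for this example.

```shell
# Sketch: report which of the given commands are available on this node.
# check_tools is a hypothetical helper name.
check_tools() {
    # Usage: check_tools <command> [<command> ...]
    missing=0
    for tool in "$@"; do
        # command -v prints the resolved path and fails if nothing is found.
        if path="$(command -v "$tool" 2>/dev/null)"; then
            echo "found:   $tool -> $path"
        else
            echo "missing: $tool"
            missing=$((missing + 1))
        fi
    done
    # Succeed only if every requested command was found.
    [ "$missing" -eq 0 ]
}
```

For example, check_tools python gcc git lists the location of each program and returns a non-zero exit status if any of them is missing, so it can be used as a guard at the top of a script.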

Useful commands on DAIC

For a list of handy commands, see Handy commands on DAIC.

4.3.3 - Modules

In the context of Unix-like operating systems, the module command is part of the environment modules system, a tool that provides a dynamic approach to managing the user environment. This system allows users to load and unload different software packages or environments on demand. Some frequently used third-party software (e.g., CUDA, cuDNN, MATLAB) is pre-installed on the cluster as environment modules.

Usage

To see or use the available modules, first, enable the software collection:

$ module use /opt/insy/modulefiles

Now, to see all available packages and versions:

$ module avail

---------------------------------------------------------------------------------------------- /opt/insy/modulefiles ----------------------------------------------------------------------------------------------

albacore/2.2.7-Python-3.4 cuda/11.8 cudnn/11.5-8.3.0.98 devtoolset/6 devtoolset/10 intel/oneapi (D) matlab/R2021b (D) miniconda/3.9 (D)

comsol/5.5 cuda/12.0 cudnn/12-8.9.1.23 (D) devtoolset/7 devtoolset/11 (D) intel/2017u4 miniconda/2.7 nccl/11.5-2.11.4

comsol/5.6 (D) cuda/12.1 (D) cwp-su/43R8 devtoolset/8 diplib/3.2 matlab/R2020a miniconda/3.7 openmpi/4.0.1

cuda/11.5 cudnn/11-8.6.0.163 cwp-su/44R1 (D) devtoolset/9 :

...

- D is a label for the default module in case multiple versions are available. E.g. module load cuda will load cuda/12.1

- L means a module is currently loaded

To check the description of a specific module:

$ module whatis cudnn

cudnn/12-8.9.1.23 : cuDNN 8.9.1.23 for CUDA 12

cudnn/12-8.9.1.23 : NVIDIA CUDA Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks.

And to use the module or package, load it as follows:

$ module load cuda/11.2 cudnn/11.2-8.1.1.33 # load the module

$ module list # check the loaded modules

Currently Loaded Modules:

1) cuda/11.2 2) cudnn/11.2-8.1.1.33
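In scripts, it can help to load modules through a small wrapper that stops early when something goes wrong. The sketch below is an assumption-laden example, not part of the DAIC setup: load_modules is a hypothetical helper name, and it assumes that module load returns a non-zero exit status on failure, which depends on the modules implementation installed on the cluster.

```shell
# Sketch: load a list of modules and stop at the first failure.
# load_modules is a hypothetical helper name.
load_modules() {
    # Usage: load_modules <module> [<module> ...]
    if ! type module >/dev/null 2>&1; then
        echo "module command not found (not on a cluster node?)" >&2
        return 1
    fi
    # Make the DAIC software collection visible, as described above.
    module use /opt/insy/modulefiles || return 1
    for m in "$@"; do
        # Assumption: a failed load returns non-zero.
        module load "$m" || { echo "could not load $m" >&2; return 1; }
    done
    module list
}
```

A script could then start with load_modules cuda/11.2 cudnn/11.2-8.1.1.33 || exit 1, so that it does not continue with an incomplete environment.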

Note

For more information about using the module system, run module help.

Compilers and Development Tools

The cluster provides several compilers and development tools through the devtoolset modules. To list the available versions:

$ module use /opt/insy/modulefiles

$ module avail devtoolset

---------------------------------------------------------------------------------------------- /opt/insy/modulefiles ----------------------------------------------------------------------------------------------

devtoolset/6 devtoolset/7 devtoolset/8 devtoolset/9 devtoolset/10 devtoolset/11 (L,D)

Where:

L: Module is loaded

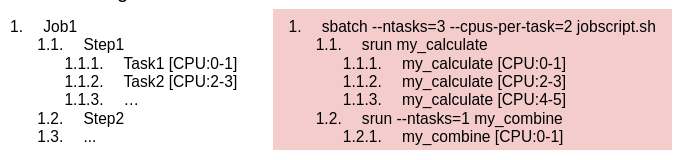

D: Default Module